Of Robots and Men

Nickolay: Ms. Wirth, you have been investigating the impact of automation and digitization on human labor at the Museum of Applied Arts in Vienna for years. How do these topics influence art projects nowadays?

Wirth:

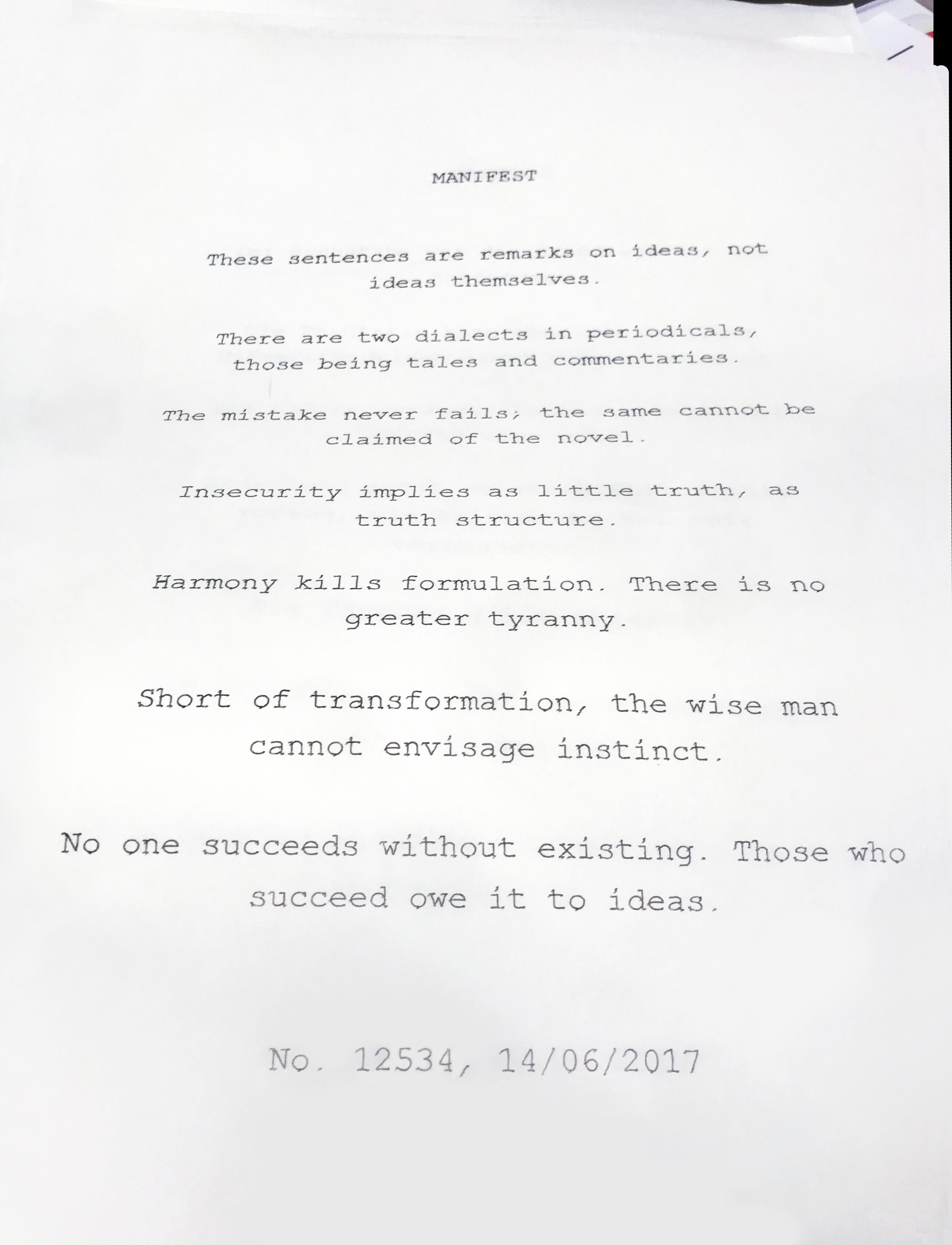

The exhibition »Uncanny Values« from 2019 brought together various works of art that shed light on the collaboration between humans and machines or artificial intelligence. We wanted to look at how artists work with AI, how they would present these topics to our audience, perhaps make them a bit more tangible than in, say, a technical article. The title is derived from the so-called »Uncanny Valley«, a theory to describe the acceptance of technology by people. The more something resembles a human being, the more uncanny we find it, for example, prosthetic arms, zombies, or even very humanoid-looking robots. In contrast, humanoid robots that are still recognizable as machines have good acceptance ratings. A very interesting subject is the question of why automation is happening and whom it serves. In the 2017 exhibition »Hello, Robot. Design between Human and Machine«, in cooperation with Vitra Design Museum, we had a KUKA arm at the exhibition that wasn’t doing what it was intended to do, but writing manifestos instead. Of course, there were artists behind it who fed relevant vocabulary into the machine. We thought it was very nice that our visitors were able to take something home with them. One of the manifestos read: »No one succeeds without existing. Those who succeed owe it to ideas.« The theme of the exhibition was captured very well here: If human labor is automated, what part of it will remain? And what would we still like to do ourselves?

Krüger:

The topic of »human-centered automation« is particularly important to us at PTZ, also in the context of digital humanism and ethical issues. In welding processes for the automotive industry, we have achieved automation levels of 96 to 98 percent using the path welding process. Here, people are really only there to observe and control the process. On the other hand, we often find processes, particularly assembly processes, that are still done by people in purely manual labor, especially in Asia. These are complex fine-motor processes that also require tactile skills, such as inserting components. Between these two extremes there is a very large area, the so-called »automation technology continuum«, which has hardly been tapped by research to date. Here, we need special forms of artificial intelligence to make robots more flexible than they are today. For example, we often talk about high-mix low-volume production: We can build cars in 10¹⁴ or even 10²⁴ combinations today. In theory, we could probably build more different cars than there are grains of sand on Earth. With so many individual configurations, a fixed programmed sequence no longer does the trick, especially in assembly. This is where human flexibility is irreplaceable, and we provide the best possible support with our technical solutions. One example of such a development is robots that help humans assemble windshields without having to exert great force. Another is the soft-robotic exosuit PowerGrasp, which provides support for activities such as overhead bolting. With the help of acceleration sensors, the robot inside the textile vest can detect the intended movement and also the degree of fatigue and thus control the built-in pneumatics to activate ergonomic power assistance with the help of compressed air. We believe that such soft robotics are best suited to humans. 
We are trying to fill the continuum between purely manual labor and full automation with intelligent solutions, so that human beings can remain in control while being supported by robots. The form of intelligence that we find in collaborative robots today, I would estimate at an IQ of 60. We have a lot of work to do there – because who would really want to collaborate with a colleague who has an IQ of 60? Psychological effects also come into play here, such as the workers' understandable fear of a fast-moving robot.

Nickolay: Ms. Wirth, your projects also raise ethical questions about automation, for example when it comes to facial recognition algorithms. Is there a line being crossed here?

Wirth:

The cui bono question is very relevant here: What is something used for and whom does it serve? Does automation serve working people, who can stay healthy and fit longer and enjoy their free time with their families? The sectors that make use of a lot of automation are predominantly growth-oriented industries. What are the underlying values with which this technology is being used? To address your example: Facial recognition can of course be super helpful, for things like access systems in buildings. But as soon as it is used by the state against its own citizens in protests, as we saw with the Chinese government, it becomes problematic. Technology per se is never bad. The question is, in whose hands is it, how do we deal with it, and where do we want to go with it?

Krüger:

In none of our research projects do we get around the so-called ELSI issues: ethical, legal and social implications. Especially when we have robots and their sensors in close contact with the human body, we also collect personal data. How do we want to deal with that? Are workers willing to have this data collected in order to benefit from the advantages of these technologies? For example, if we use acceleration sensors to record fatigue in order to help workers, we could also derive findings about their overall performance. That's where things start to get critical.

Rapid progress is being made in fundamental AI research, partly due to the huge amount of data available to us through social media and the like. But what we are seeing at the same time is that the gap between what is being achieved in fundamental research and what is being applied in industry is widening every day. The problem is, if a factory’s production is supposed to be driven by AI – who will take responsibility? Those who could do it are mostly men my age without any deeper knowledge or understanding of AI methods. Now they are supposed to sign off that their AI-controlled production line is to produce thousands of cars per day – how are they supposed to assess that? As long as we have this gap, we will have a big problem harnessing the potential of AI in production. We would have to put at least as much effort and resources into empowering the people who are supposed to use AI as we do into fundamental research methods. This is where experiments like the ones artists do can offer a different perspective on how AI works.

Wirth:

To circle back to the question of how the collected data are being used: In the exhibition »Hello, Robot« we showed a project by the London-based studio Superflux, who designed care tools – fictional ones in this case, but some of them could be real. For example, a smart walking stick that reminds the user to take his evening walk. A smart fork that reminds him to eat properly. A smart pillbox that monitors medication administration. They made a video about an elderly man coming up with all kinds of ideas to escape this surveillance. His family calls him: »You haven't walked around the block today! You ate bratwurst instead of zucchini! What's wrong?« So he gives the neighbor boy some cash and sends him on a walk with the cane. He sticks the smart fork in the zucchini while he continues to eat the bratwurst. And about the pillbox, there is a note in the credits that insurance companies will only be covering medications upon proof that they are being used for the right purpose. Again, the question is: Who gets this information, why is it needed? In the case of the factory worker, the data may only be fed into the system, but not be accessible to supervisors or colleagues.

Nickolay: The use of robots in nursing will also be a very interesting field. There is an enormous demand. On the other hand, there are plenty of clues that people miss the tactile and emotional aspects of care.

Krüger:

In contrast to machine vision, machine sensing is still in its infancy. How do robots hold an elderly person by the rib cage without breaking their ribs? We are still a long way from being able to transfer these tactile skills.

Wirth:

Support for the heavy physical work of nursing is absolutely relevant, but there is no substitute for the empathy of the people providing this care. One of my exhibition projects in 2017 was called »Artificial Tears.« There are artificial tears to moisten the eyes. However, real tears do not only serve this purpose. When someone is crying, opioids are released to calm the person down. Crying is a highly complex process that cannot be automated. In ancient Greece, there were wailing women who could be hired to mourn publicly, but the work of grieving cannot be taken away from you. I always like to use that as a comparison when thinking about what can be automated and what we will be left to do, think and feel, even if we get machines to support us.

Krüger:

The whole thing is also a cultural question. We know that Japanese culture, for example, is very robot-friendly. The use of robotic animals to entertain people in care facilities has worked much better there. On the other hand, Toyota only has an automation level of eight percent in its assembly line, because there are smart people there who have also partially scaled back automation. One of these smart people is Mitsuru Kawai, who said that only people can improve processes. That's why people are the focus at Toyota. Because improving processes is a practice of creative learning that we may be able to automate at some point, but I don't believe that will happen in the foreseeable future.

Wirth:

I don't think so either. I do believe that we need to talk about the concept of creativity, which people like to attribute to us working in the cultural sector. But it applies to all areas of life, in every company, in every work process you need creativity. Every one of us has it, it just depends on the task at hand. In recent years, management consultants have repeatedly pointed out that more diverse teams are better at improving processes and solving problems. I find that a very interesting input for the zeitgeist – also with regard to cooperation between humans and robots.

This is the abridged and edited version of the fourth panel discussion in the series »Science and Culture in Conversation« organized by the Austrian Cultural Forum Berlin and Fraunhofer IPK. The conversation on »Human-centered Automation« took place at PTZ Berlin on September 14, 2022, moderated by Dr. Bertram Nickolay, expert in machine vision and former department head at Fraunhofer IPK. The recording of the entire event is available as a video here [German only].

Marlies Wirth

curates exhibitions at the MAK – Museum of Applied Arts in Vienna in the fields of art, design, architecture and technology, such as the group exhibitions »Artificial Tears« and »Uncanny Values«. She is part of the curatorial team of the international traveling exhibition »Hello, Robot. Design between Human and Machine« and was co-director of the Global Art Forum 2018: »I Am Not a Robot« in Dubai and Singapore.

Prof. Dr.-Ing. Jörg Krüger

is managing director of the IWF at TU Berlin as well as head of the Automation Technology department at Fraunhofer IPK. His research focuses on human-centered and image-based automation. He and his teams develop control and robotic systems for human-robot collaboration and medical rehabilitation, as well as methods and applications of machine vision for object and position recognition in production.

Fraunhofer Institute for Production Systems and Design Technology