A Smart Helpdesk

Generative artificial intelligence (genAI) has found its way into the working world – whether it is among software developers who get ChatGPT to help them write code, specialists in production for whom AI-supported search engines provide solutions to their daily problems, or designers who find inspiration in the form of AI-generated images.

These real-world developments inevitably confront companies with questions of how to position themselves in relation to genAI technologies and how to utilize them. Most are still at the very beginning, some have launched initial pilot projects – but concrete objectives and potential application scenarios are often still unclear. In addition, many companies have ethical and legal concerns and legitimate questions about data protection, copyright and the protection of intellectual property (IP).

Researchers at IWF at TU Berlin and Fraunhofer IPK are therefore jointly working on solutions for deploying genAI models that are tailored for use in companies. Their research and development is specifically aimed at real questions and problems in company-internal knowledge management: How can genAI support companies in making specific domain knowledge more easily accessible to their employees? How can this be done securely while protecting IP? How can the use of genAI be embedded in the company strategy? And finally, how can employees be motivated to accept and use an AI-based knowledge management system?

The focus of the R&D activities is a chat prototype that is based on self-hosted large language models (LLMs) and can act as the company’s own chatbot, autonomously generating written replies and content in response to questions or suggestions from employees. In the future, this chatbot should make it easy to access knowledge that is available within a given company. In addition to technical issues, the team at TU Berlin and Fraunhofer IPK is also researching aspects of organizational development, such as cultural acceptance and the skills employees need to use the chat prototype effectively. The goal is a holistic solution that integrates both technological and organizational development. This way, genAI technologies are strategically combined with human expertise so that they serve as tools to complement human intelligence.

Focus on IP protection

As part of the »ProKI« project, researchers at IWF at TU Berlin are investigating how LLMs can be used in conjunction with so-called retrieval algorithms to map companies’ domain knowledge and make it accessible to the company within a structured, self-contained and therefore secure framework. What ChatGPT and others achieve on a large scale in the form of so-called foundation models, i.e. very large knowledge models, can also be developed in a smaller context specifically for individual companies.

Self-hosted LLMs allow for structured and protected access to a knowledge base, even when the amounts of available written knowledge are relatively small, for example from technical documentation such as operating instructions, maintenance logs, checklists or other company sources. It is precisely this company-specific knowledge, or domain knowledge, that is the companies’ intellectual capital and therefore mission-critical. The main advantage of the prototype developed at IWF is that this domain knowledge in its various forms can now be accessed through simple communication in everyday language, both spoken and written, and even in the form of images. Through the self-hosted in-house structure, organization-specific requirements concerning proprietary information and intellectual property can be met.

Friendships in the vector space

The researchers are developing different LLM-based chat prototypes that allow employees to interact securely with domain-specific knowledge. The aim is for this interaction to take place entirely within the boundaries of the local network with locally stored LLMs. For this purpose, different tools of natural language processing (NLP), a discipline that deals with understanding, processing and generating natural language by machines, are combined in a pipeline. This pipeline is known as retrieval-augmented generation (RAG) and combines the strengths of generative and retrieval-based AI.
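The overall shape of such a pipeline can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the prototype's actual code: the names `build_prompt`, `answer`, `keyword_retrieve` and `echo_generate` are hypothetical, the three sample documents are invented, the retriever is a simple word-overlap ranking, and the generator is a placeholder that stands in for a call to a self-hosted LLM.

```python
import re
from typing import Callable, List

# Invented sample documents standing in for a company knowledge base.
DOCS = [
    "Check the spindle coolant level before each milling job.",
    "The maintenance log is updated after every tool change.",
    "Vacation requests are submitted through the HR portal.",
]


def build_prompt(question: str, passages: List[str]) -> str:
    """Merge the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


def answer(
    question: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str], str],
    top_k: int = 2,
) -> str:
    """Retrieve first, then generate: the two stages of a RAG pipeline."""
    passages = retrieve(question, top_k)
    return generate(build_prompt(question, passages))


def keyword_retrieve(question: str, top_k: int) -> List[str]:
    """Hypothetical retriever: rank documents by shared words."""
    q_words = set(re.findall(r"[a-z]+", question.lower()))

    def overlap(doc: str) -> int:
        return len(q_words & set(re.findall(r"[a-z]+", doc.lower())))

    return sorted(DOCS, key=overlap, reverse=True)[:top_k]


def echo_generate(prompt: str) -> str:
    """Placeholder for a locally hosted LLM: returns the prompt unchanged."""
    return prompt


if __name__ == "__main__":
    print(answer("How do I check the coolant level?",
                 keyword_retrieve, echo_generate, top_k=1))
```

Because the retriever and the generator are passed in as functions, either stage can be swapped out, for instance for a vector search or a different local model, without touching the rest of the pipeline.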

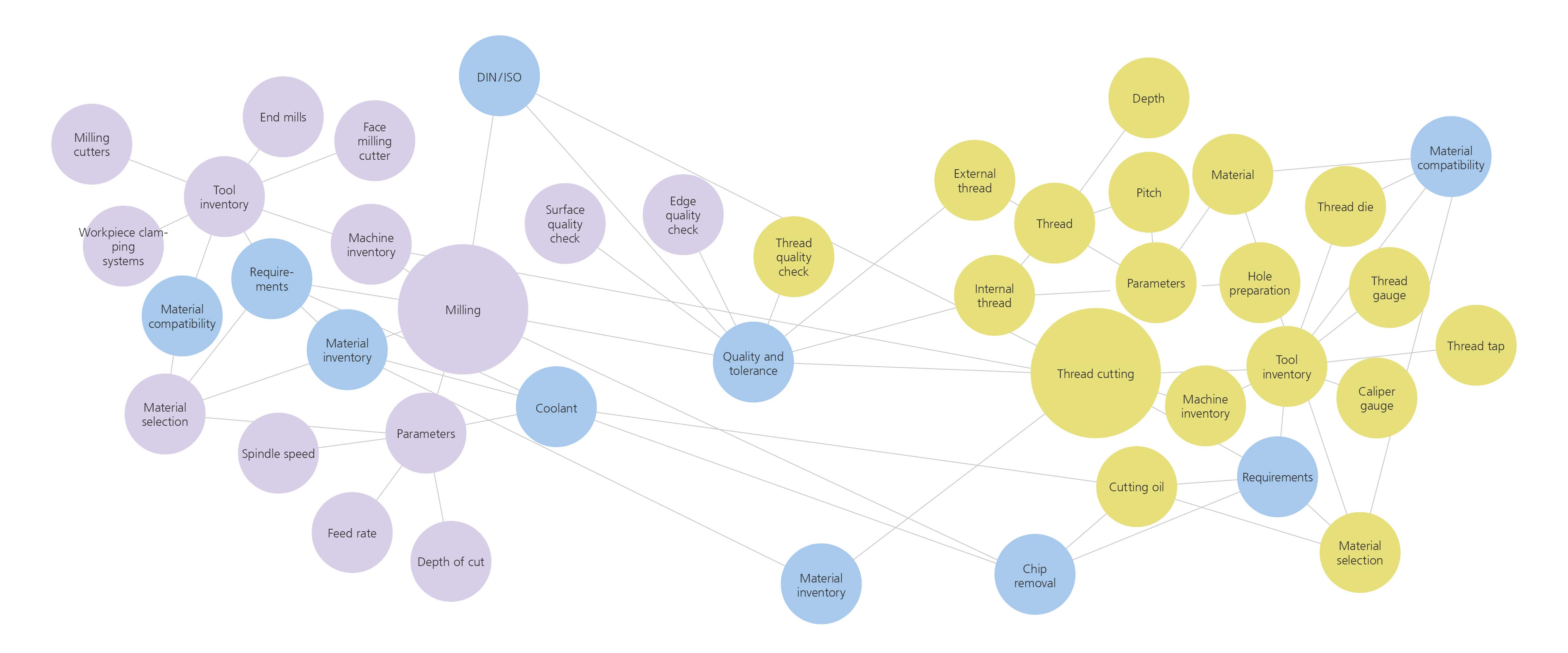

First, a method called embedding is used to condense the semantic meaning of the database, e.g. operating instructions for milling machines. This technique represents the embedded texts as dense vectors in a high-dimensional vector space, in which similar content lies close together. This is similar to a social network in which words or phrases become »friends« with other words if they frequently interact with each other. Words and phrases with similar meanings or usages are close friends, such as »milling« and »machining«. By recognizing these stochastic-syntactic patterns, embedding models help to use words and phrases in a context-related way, based on the domain knowledge fed into the system. So, if someone asks the chat prototype a question, it is first placed in the domain-specific context, for example the vector space for operating milling machines, using the pre-trained embedding model. The retrieval algorithm then selects the stored passages that lie closest to the question in this vector space. Finally, a self-hosted LLM is integrated into the pipeline, which uses the context gained from the retrieval algorithm to provide information-rich answers in everyday language.
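The notion of closeness in a vector space can be made concrete with a small sketch. It assumes a deliberately simple stand-in for the embedding model: each text becomes a sparse vector of word counts, and closeness is measured by cosine similarity. A learned embedding model would produce dense vectors and would also place related words such as »milling« and »machining« near each other, which word counts cannot do. The functions `embed`, `cosine` and `nearest` and the sample passages are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

# Invented sample passages standing in for embedded documentation.
PASSAGES = [
    "Set the spindle speed before milling aluminium parts.",
    "Replace the coolant filter every six months.",
    "Record each tool change in the maintenance log.",
]


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a word-count vector, not a learned model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity: 1.0 for the same direction, 0.0 for no shared words."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query: str, passages: List[str], top_k: int = 1) -> List[str]:
    """Return the passages whose vectors lie closest to the query vector."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    print(nearest("Which spindle speed for milling aluminium?", PASSAGES))
```

In a setup like the one described, the ranking step would stay the same while `embed` is replaced by the pre-trained embedding model, so that questions and documents are compared by meaning rather than by shared words.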

More than just technology

Modern AI solutions unlock enormous potential to support systematic knowledge handling, optimizing the performance of business processes and contributing to achieving corporate objectives. However, the experience of the knowledge management experts at Fraunhofer IPK shows: It is not enough to simply provide a new technology. Instead, a comprehensive view of the entire organization is required to ensure successful implementation of AI-based knowledge management. Companies should therefore clearly define at an early stage which knowledge management objectives they want to pursue with the introduction of genAI technologies. Employees must also be trained in the use of genAI to make sure they are able to use the technology effectively. In addition to knowledge about the fundamentals of AI, ethical and legal aspects, media competence and technical skills such as prompting must also be taken into account.

AI can offer support with current challenges in knowledge management such as intergenerational knowledge transfer. This may include systematic onboarding as well as recording and preserving the know-how of long-standing experts when they leave the company. The LLM-based chat prototype is intended to help with these tasks in the future.

As a »digital colleague«, the chat prototype can be an excellent first point of contact for new employees. By analyzing the company’s extensive database, the chat prototype will also be able to identify the right contacts with expertise on specific issues, thus also promoting offline knowledge transfer between employees.

Fraunhofer Institute for Production Systems and Design Technology
